Week 3 Beta Testing at NYC Internet Yami-Ichi

In which we visit the internet black market and take some screenshots.

Preparation

I was tipped off to the existence of the NYC Internet Yami-Ichi by some colleagues from ITP Camp at NYU. The event began in Japan and is devoted to the extrapolation of everything “internet-ish” into the real world. Due to my interest in net art and utilizing online interactions as an artistic medium, I was immediately interested in participating. It also seemed like an excellent place for the first test of annotatAR in the wild.

I set the bar for the beta-test fairly low - the key here would be to see how and if people utilized the core functionality of tweets displayed on a real-time getUserMedia video feed. I deployed a test version of the app on Meteor’s test server. The Meteor platform proved itself useful in this regard: it was quite easy to deploy multiple versions from the same code base by simply changing the URL in the meteor deploy command.

annotatAR achieved the minimal viable test build plus a few extra features:

- geolocation (coordinates and accuracy) was displayed below the video feed - for testing purposes only

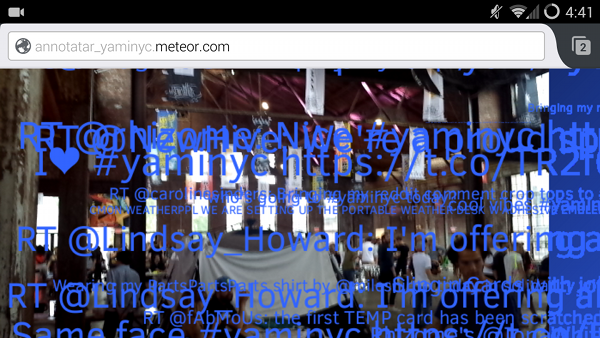

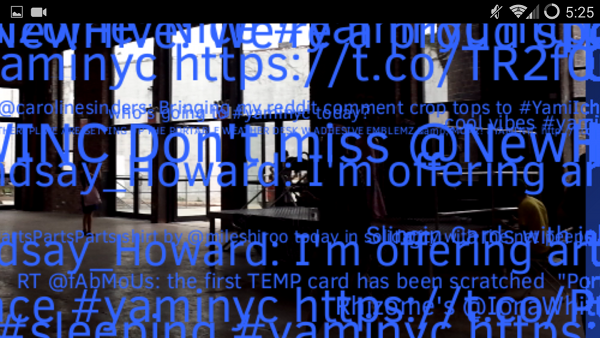

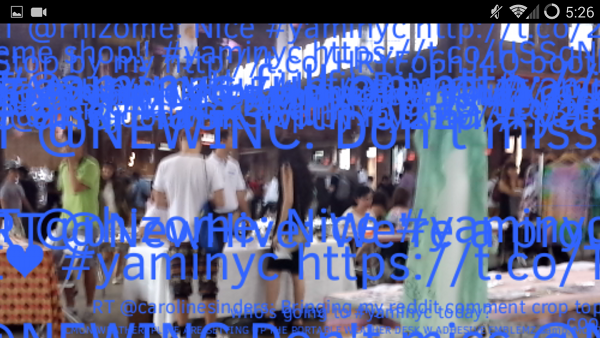

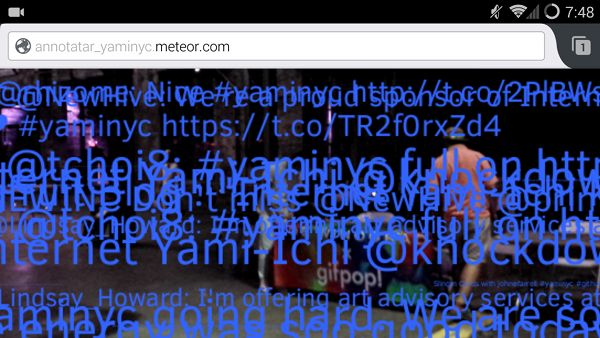

- tweet data was displayed in decreasing font size - the age of each tweet was linearly mapped to the font scale, so tweets appeared at 50px and shrank to 5px over the course of 8 hours

- the database stores the hashtag for the search that generated each tweet
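
The age-to-size mapping described above can be sketched as a small function. This is a minimal illustration rather than the app's actual code: the function and constant names are hypothetical, and clamping at the two ends is an assumption, since the behavior for tweets older than 8 hours is not specified here.

```typescript
// Bounds and fade window come from the post; all names are hypothetical.
const MAX_FONT_PX = 50;
const MIN_FONT_PX = 5;
const FADE_WINDOW_MS = 8 * 60 * 60 * 1000; // 8 hours in milliseconds

// Linearly map a tweet's age to a font size in pixels.
function fontSizeForAge(ageMs: number): number {
  // Assumption: clamp to [0, 1] so future-dated or very old tweets
  // stay within the 50px..5px range.
  const t = Math.min(Math.max(ageMs / FADE_WINDOW_MS, 0), 1);
  return MAX_FONT_PX - t * (MAX_FONT_PX - MIN_FONT_PX);
}

// A tweet posted 4 hours ago sits halfway along the fade.
const fourHoursMs = 4 * 60 * 60 * 1000;
console.log(fontSizeForAge(fourHoursMs)); // 27.5
```

In the app, the age would presumably be the current time minus the tweet's creation timestamp, with the result applied as a CSS `font-size` in pixels.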

Survey

I also designed a brief user feedback survey and posted it on Google Forms, and made a paper version, which is what I ended up using in situ.

The survey captures whether or not the video and tweet functions work, information about the device & browser, and some open-ended questions.

Internet Yami-Ichi Deployment

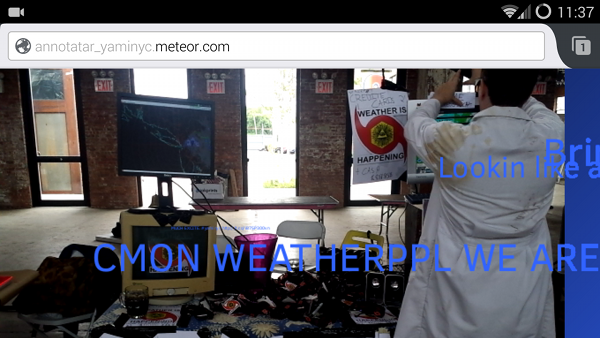

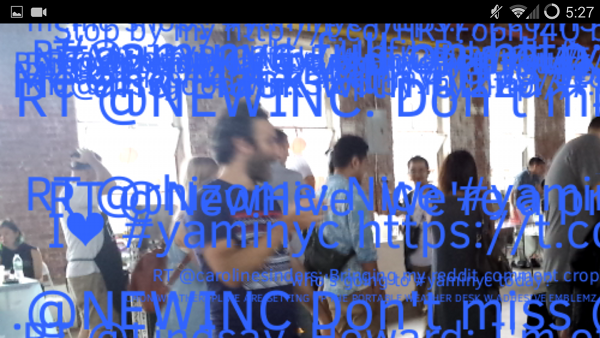

The #yaminyc app was deployed the night before the event, and targeted at the Twitter hashtag “#yaminyc” where a couple of folks had already begun to interact.

I completed two separate user tests during the event. One was successful and generated some great feedback regarding next directions for the UI: a “selfie” mode, a screen-capture button, and an animated GIF generator. The second test was not as successful - it was on an iPhone, and it seemed that the phone never asked the user for permission to connect to the getUserMedia device at all. This may be a significant issue going forward, if iPhone defaults interfere with this browser functionality.
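
One possible explanation for a missing permission prompt is that the browser does not expose getUserMedia at all, in which case no request is ever made. The sketch below checks for the standard and vendor-prefixed entry points. It is a hedged illustration, not the app's code: `NavigatorLike`, `CameraSupport`, and `detectCameraSupport` are hypothetical names, and the function takes a navigator-shaped object so it can be exercised outside a browser.

```typescript
// Minimal shape of the browser's navigator object, limited to the
// getUserMedia entry points this check cares about (hypothetical type).
interface NavigatorLike {
  mediaDevices?: { getUserMedia?: unknown };
  getUserMedia?: unknown;
  webkitGetUserMedia?: unknown;
  mozGetUserMedia?: unknown;
}

type CameraSupport = "standard" | "prefixed" | "none";

// Report which flavor of getUserMedia, if any, the browser exposes.
function detectCameraSupport(nav: NavigatorLike): CameraSupport {
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return "standard";
  }
  if (
    typeof nav.getUserMedia === "function" ||
    typeof nav.webkitGetUserMedia === "function" ||
    typeof nav.mozGetUserMedia === "function"
  ) {
    return "prefixed";
  }
  // No entry point: the page cannot even ask for camera permission.
  return "none";
}

// A browser object with no camera API yields "none".
console.log(detectCameraSupport({}));
```

In a page, the browser's own `navigator` object would be passed in. A result of `"none"` would let the app show an explanatory message instead of a blank video area, and logging it during a user test would help tell an unsupported browser apart from a denied permission.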

I also took a number of screenshots at the event. It is quite an expressive way of documenting this type of gathering - one gets a sense of a crowd of virtual eavesdropping entities.

Lessons

I think that annotatAR was quite effective in achieving the union of virtual and real space in an expressive way.

I was a bit frustrated with the quality of the web camera picture, and I wonder if anything can be done to improve it.

I’m also brainstorming about the next level of the project: the desktop site. Perhaps it should be a focal point for sharing screenshots, or perhaps the screenshots could be utilized in a larger work of some kind. I now have a database of tweets from an event to experiment with, as a corpus for text analysis.

I’m a bit concerned about the failure of iOS to connect to the camera via WebRTC’s getUserMedia, which I was not expecting, since I understood it to be supported by the versions of Firefox and Chrome on the iPhone that was tested. I will address this in my upcoming post on scoping for compatibility and audience.